Install, configure, and run Hadoop 2.2.0 on Mac OS X

I’ve installed Hadoop 2.2.0 and set everything up (for a single node) following the tutorial here: Hadoop YARN Installation. However, I can’t get Hadoop running.

I think my problem is that I can’t connect to my localhost, but I’m not quite sure why. I’ve spent about 10 hours installing, googling, and hating open source software installation guides, so I’m now turning to a place that never disappoints me.

Since a picture is worth a thousand words, here are my settings as screenshots:

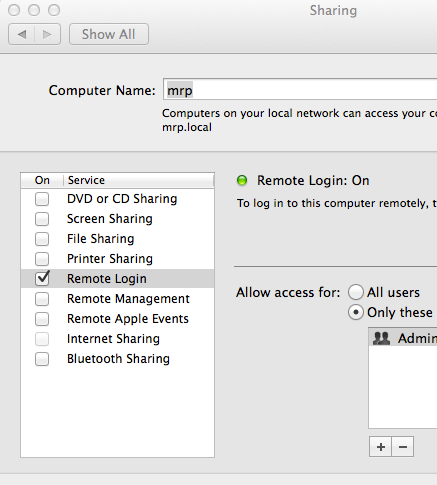

Basic profile/settings

I’m running Mac OS X (Mavericks 10.9.5).

For what it’s worth, here is my /etc/hosts file:

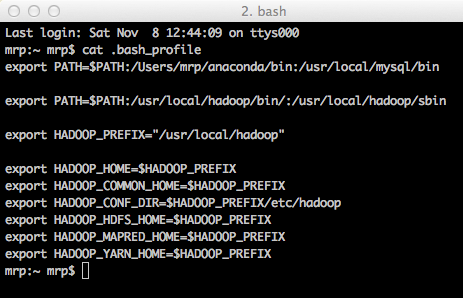

My bash config file:

Hadoop file configuration

Settings for core-site.xml and hdfs-site.xml:

Note: I’ve already created the folder at the location shown above.

Settings for my yarn-site.xml:

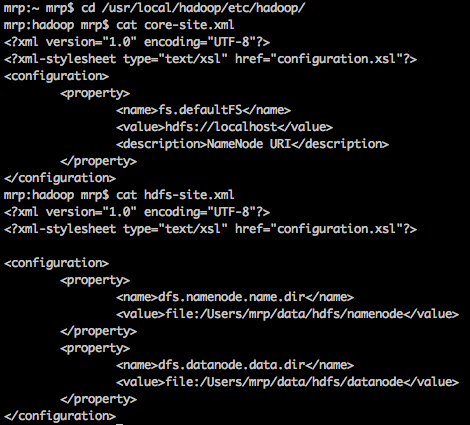

Settings for my hadoop-env.sh file:

Side note

Before I show the results of running start-dfs.sh and start-yarn.sh and checking what’s running with jps, keep in mind that I have a hadoop pointing to hadoop-2.2.0.

Start Hadoop

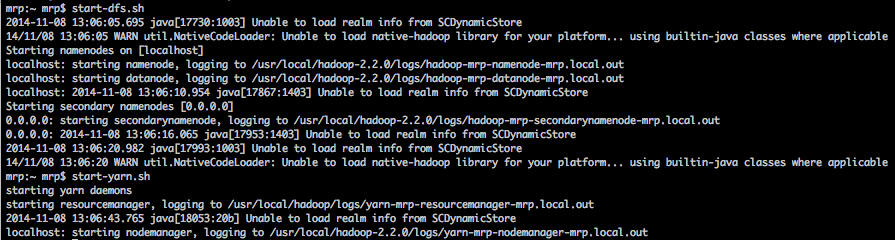

Now, this is what happens when I start the daemons:

For those without a microscope (the image looks very small in the preview of this post), here is the output shown above as text:

mrp:~ mrp$ start-dfs.sh

2014-11-08 13:06:05.695 java[17730:1003] Unable to load realm info from SCDynamicStore

14/11/08 13:06:05 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform… using builtin-java classes where applicable

Starting namenodes on [localhost]

localhost: starting namenode, logging to /usr/local/hadoop-2.2.0/logs/hadoop-mrp-namenode-mrp.local.out

localhost: starting datanode, logging to /usr/local/hadoop-2.2.0/logs/hadoop-mrp-datanode-mrp.local.out

localhost: 2014-11-08 13:06:10.954 java[17867:1403] Unable to load realm info from SCDynamicStore

Starting secondary namenodes [0.0.0.0]

0.0.0.0: starting secondarynamenode, logging to /usr/local/hadoop-2.2.0/logs/hadoop-mrp-secondarynamenode-mrp.local.out

0.0.0.0: 2014-11-08 13:06:16.065 java[17953:1403] Unable to load realm info from SCDynamicStore

2014-11-08 13:06:20.982 java[17993:1003] Unable to load realm info from SCDynamicStore

14/11/08 13:06:20 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform… using builtin-java classes where applicable

mrp:~ mrp$ start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /usr/local/hadoop/logs/yarn-mrp-resourcemanager-mrp.local.out

2014-11-08 13:06:43.765 java[18053:20b] Unable to load realm info from SCDynamicStore

localhost: starting nodemanager, logging to /usr/local/hadoop-2.2.0/logs/yarn-mrp-nodemanager-mrp.local.out

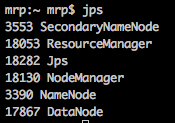

Check what’s running:

Time out

Good. So far, I think, so good. At least according to all the other tutorials and posts, this looks good. I think.
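For readers who cannot see the jps screenshot, the check can be sketched as a small shell function. This is only a sketch: the function name `missing_daemons` is made up for illustration, and the daemon list assumes a single-node setup where start-dfs.sh and start-yarn.sh are expected to start all five processes.

```shell
# Prints each expected Hadoop daemon that is missing from jps-style input
# ("<pid> <ClassName>" per line) read on stdin.
# Assumption: a single-node setup running all five daemons below.
missing_daemons() {
  input=$(cat)
  for daemon in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # Compare the whole second field so "NameNode" does not match "SecondaryNameNode".
    if ! printf '%s\n' "$input" | awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
      echo "missing: $daemon"
    fi
  done
}

# Usage, assuming jps (shipped with the JDK) is on the PATH:
#   jps | missing_daemons
```

If this prints nothing, all five daemons appear in the jps listing. A daemon that shows up right after startup can still exit a few seconds later, so it may be worth running the check again after a short wait.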

Before I try anything fancy, I just want to see whether it works by running a simple command like hadoop fs -ls.

Failure

When I run hadoop fs -ls, this is what I get:

Again, in case you can’t see the image, it says:

2014-11-08 13:23:45.772 java[18326:1003] Unable to load realm info from SCDynamicStore

14/11/08 13:23:45 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform… using builtin-java classes where applicable

ls: Call From mrp.local/127.0.0.1 to localhost:8020 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused

I tried running other commands and got the same basic error at the start of each one:

Call From mrp.local/127.0.0.1 to localhost:8020 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused
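"Connection refused" generally means that nothing accepted the connection on that port, so one quick test is whether anything is listening on localhost:8020 at all. A minimal sketch, assuming the shell is bash (the `/dev/tcp` path is a bash feature, not a real file) and using a helper name, `port_open`, that is made up for illustration:

```shell
# Assumption: this runs in bash; /dev/tcp/<host>/<port> is handled by bash itself.
port_open() {
  # Try to open a TCP connection in a subshell; the exit status is 0 only if it succeeds.
  (exec 3<>"/dev/tcp/$1/$2") 2>/dev/null
}

if port_open localhost 8020; then
  echo "something is listening on localhost:8020"
else
  echo "nothing is listening on localhost:8020"
fi
```

If nothing is listening, the client is probably fine and the NameNode is either not running or bound to a different address or port than the one the client is using.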

Now, I’ve visited the page mentioned in the error, but honestly, nothing on it makes sense to me. I don’t understand what I should do.
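One way to narrow this down is to look at what the NameNode itself logged at startup. The sketch below pulls recent error lines out of a log file. The helper name `recent_errors` is made up, and the `.log` path in the usage comment is an assumption: the startup output above only shows the `.out` files, and the sketch assumes the detailed log sits next to them with a `.log` extension.

```shell
# Prints up to the last 20 lines of a log file that contain ERROR or FATAL.
# Returns non-zero if the file cannot be read.
recent_errors() {
  if [ ! -r "$1" ]; then
    echo "cannot read $1"
    return 1
  fi
  grep -E 'ERROR|FATAL' "$1" | tail -n 20
}

# Usage (assumed path, derived from the .out file names in the startup output):
#   recent_errors /usr/local/hadoop-2.2.0/logs/hadoop-mrp-namenode-mrp.local.log
```

An empty result only means that no line matched, so reading the tail of the full log is still worthwhile if the NameNode keeps refusing connections.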

I would be very grateful for any help. You’ll make me the happiest Hadooper ever.

It goes without saying, but I’d be happy to edit this post or add more information if needed. Thanks!

Solution

Add these lines to your .bashrc:

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib"
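Both lines expand `$HADOOP_HOME` at the moment they run, so `HADOOP_HOME` has to be exported earlier in the same file. The new values also only reach shells started afterwards, or the current one after `source ~/.bashrc`. On Mac OS X, Terminal opens login shells, which read `~/.bash_profile` rather than `~/.bashrc` by default, so the lines may need to go there instead. A small sketch for confirming what the current shell actually sees (the function name `check_hadoop_env` is made up for illustration):

```shell
# Reports, for each variable used above, either its current value or that it is
# unset or empty in this shell.
check_hadoop_env() {
  for name in HADOOP_HOME HADOOP_COMMON_LIB_NATIVE_DIR HADOOP_OPTS; do
    # Indirect lookup of the variable whose name is stored in $name.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "$name is not set"
    else
      echo "$name=$value"
    fi
  done
}

check_hadoop_env
```

If any of the three is reported as not set after opening a new terminal, the exports are probably in a file that the shell does not read at startup.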