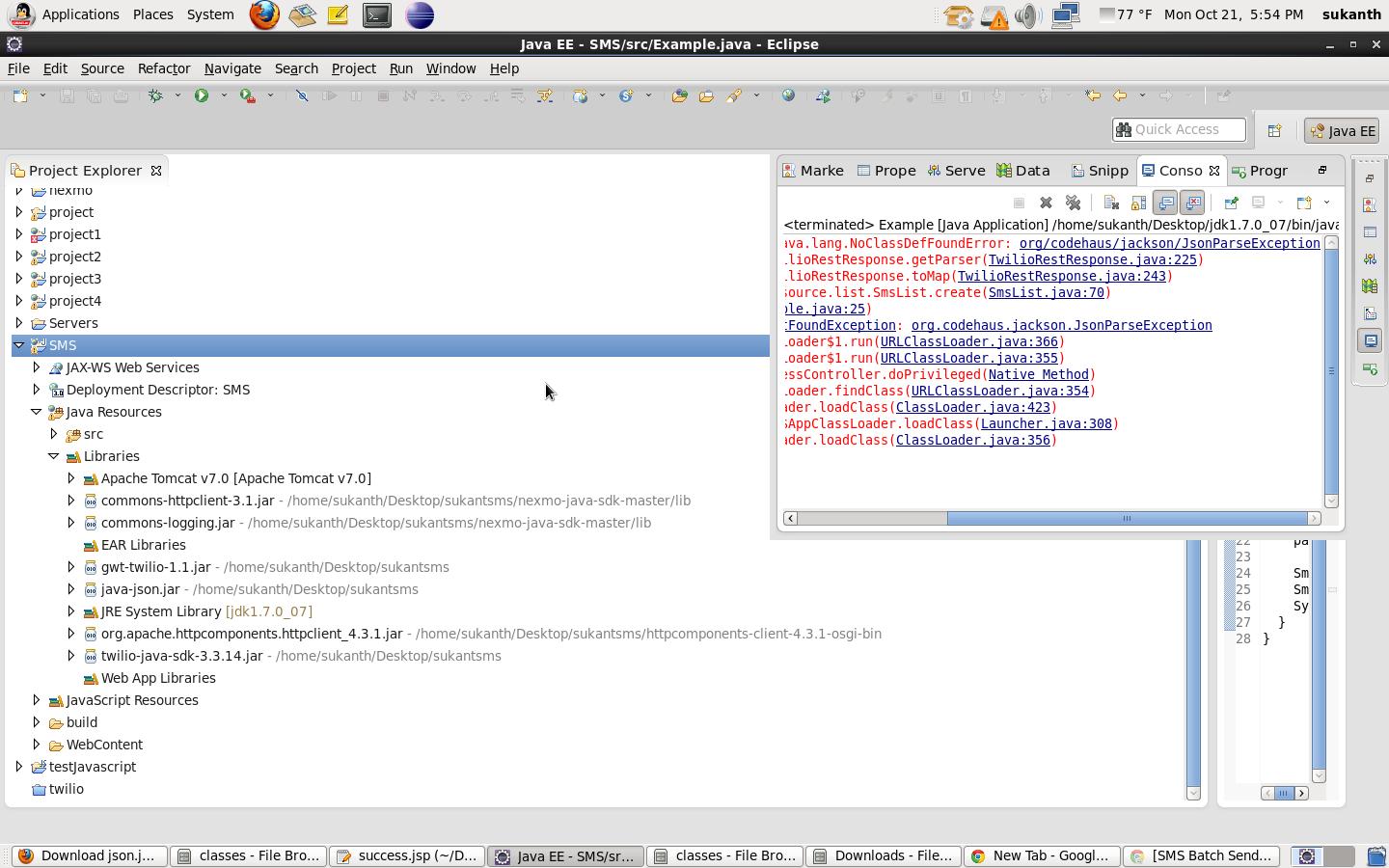

Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/hadoop/hbase/HBaseConfiguration

I'm using Hadoop 1.0.3 and HBase 0.94.22. I'm trying to run a mapper program that reads values from an HBase table and writes them to a file. I get the following error:

Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/hadoop/hbase/HBaseConfiguration

at java.lang.Class.forName0(Native Method)

at java.lang.Class.forName(Class.java:340)

at org.apache.hadoop.util.RunJar.main(RunJar.java:149)

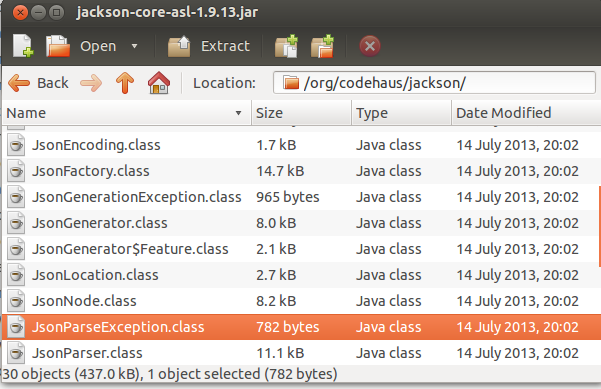

Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.hbase.HBaseConfiguration

at java.net.URLClassLoader$1.run(URLClassLoader.java:372)

at java.net.URLClassLoader$1.run(URLClassLoader.java:361)

at java.security.AccessController.doPrivileged(Native Method)

at java.net.URLClassLoader.findClass(URLClassLoader.java:360)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

The code is as follows:

```java
import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.client.Result;
import org.apache.hadoop.hbase.client.Scan;
import org.apache.hadoop.hbase.filter.FirstKeyOnlyFilter;
import org.apache.hadoop.hbase.io.ImmutableBytesWritable;
import org.apache.hadoop.hbase.mapreduce.TableMapReduceUtil;
import org.apache.hadoop.hbase.mapreduce.TableMapper;
import org.apache.hadoop.hbase.util.Bytes;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class Test {

    static class TestMapper extends TableMapper<Text, IntWritable> {

        private static final IntWritable one = new IntWritable(1);

        @Override
        public void map(ImmutableBytesWritable row, Result value, Context context)
                throws IOException, InterruptedException {
            // Use the first Bytes.SIZEOF_INT (4) bytes of the row key as the output key.
            // Offset and length are passed explicitly because row.get() returns the
            // whole backing array, not just this row's key.
            int len = Math.min(Bytes.SIZEOF_INT, row.getLength());
            String key = Bytes.toString(row.get(), row.getOffset(), len);
            context.write(new Text(key), one);
        }
    }

    public static void main(String[] args) throws Exception {
        // HBaseConfiguration.create() loads hbase-default.xml and hbase-site.xml;
        // the no-arg HBaseConfiguration constructor is deprecated.
        Configuration conf = HBaseConfiguration.create();
        Job job = new Job(conf, "hbase_freqcounter");
        job.setJarByClass(Test.class);

        Scan scan = new Scan();
        FileOutputFormat.setOutputPath(job, new Path(args[0]));
        String columns = "data";
        scan.addFamily(Bytes.toBytes(columns));
        // Return only the first KeyValue of each row; the mapper only needs the row key.
        scan.setFilter(new FirstKeyOnlyFilter());

        TableMapReduceUtil.initTableMapperJob("test", scan, TestMapper.class,
                Text.class, IntWritable.class, job);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);

        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
```

I export the above code to a jar file and run it from the command line with the following command:

```
hadoop jar /home/testdb.jar test
```

where `test` is the folder where the mapper results should be written.

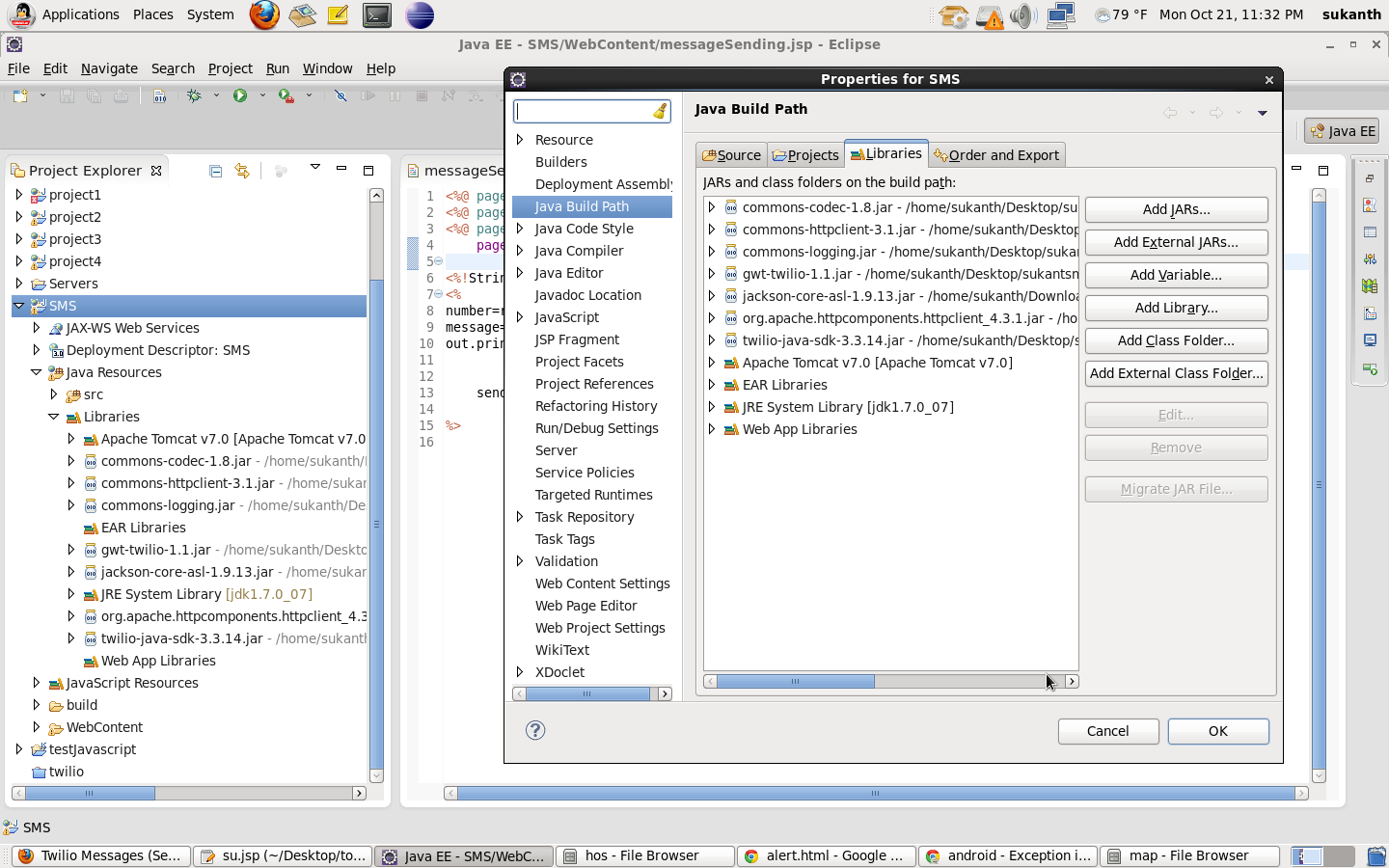

I checked some other questions, such as "Caused by: java.lang.ClassNotFoundException: org.apache.zookeeper.KeeperException", where the recommendation is to include the ZooKeeper jar in the classpath. But when creating the project in Eclipse, I did include the ZooKeeper jar from HBase's lib directory (zookeeper-3.4.5.jar). I also looked at "HBase – java.lang.NoClassDefFoundError in java", but I'm using a mapper class to read values from HBase tables rather than reading them through the client API. I know I made a mistake somewhere; can you help me?
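Adding a jar to the Eclipse build path only affects compilation inside Eclipse; it does not put that jar on the classpath of the JVM that `hadoop jar` starts. Hadoop's RunJar does add any `lib/*.jar` entries found inside the job jar to the classpath, so one quick check is whether the exported jar contains such entries at all (a plain Eclipse JAR export typically does not package build-path libraries). The sketch below is a hypothetical helper (`JarLibCheck` is not part of Hadoop or HBase) using only `java.util.jar`:

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.Enumeration;
import java.util.List;
import java.util.jar.JarEntry;
import java.util.jar.JarFile;

public class JarLibCheck {
    /** Returns the names of all entries under lib/ that end in .jar. */
    static List<String> bundledJars(String jarPath) throws IOException {
        List<String> result = new ArrayList<>();
        try (JarFile jar = new JarFile(jarPath)) {
            Enumeration<JarEntry> entries = jar.entries();
            while (entries.hasMoreElements()) {
                String name = entries.nextElement().getName();
                if (name.startsWith("lib/") && name.endsWith(".jar")) {
                    result.add(name);
                }
            }
        }
        return result;
    }

    public static void main(String[] args) throws IOException {
        // Default path taken from the command in the question; pass another as args[0].
        String jarPath = args.length > 0 ? args[0] : "/home/testdb.jar";
        List<String> libs = bundledJars(jarPath);
        if (libs.isEmpty()) {
            System.out.println("No lib/*.jar entries: HBase classes must come from HADOOP_CLASSPATH.");
        } else {
            for (String lib : libs) {
                System.out.println(lib);
            }
        }
    }
}
```

If the output says there are no `lib/*.jar` entries, the HBase and ZooKeeper jars that Eclipse compiled against are simply not visible when the job is launched.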

I noticed another strange thing: when I removed every line in the main function except `HBaseConfiguration conf = new HBaseConfiguration();`, exported the code to a jar file, and ran it with `hadoop jar test.jar`, I still got the same error. It looks like either I'm not defining the conf variable correctly or there's something wrong with my environment.
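A `main` that fails even when it contains nothing but the `HBaseConfiguration` line points at the runtime classpath rather than the job code. A minimal sketch for checking which classes the launching JVM can actually see is shown below; `ClasspathCheck` is a hypothetical name, and the class list is just an illustrative pick of one class from each of the HBase, ZooKeeper, protobuf and Guava jars:

```java
import java.security.CodeSource;

public class ClasspathCheck {
    /**
     * Returns the location className was loaded from, or null if it cannot be loaded.
     * initialize=false skips static initializers, so only loading and linking are tested.
     */
    static String locate(String className) {
        try {
            Class<?> c = Class.forName(className, false, ClasspathCheck.class.getClassLoader());
            CodeSource src = c.getProtectionDomain().getCodeSource();
            // Classes from the bootstrap loader (e.g. java.lang.String) have no CodeSource.
            return src == null ? "<bootstrap>" : String.valueOf(src.getLocation());
        } catch (ClassNotFoundException | LinkageError e) {
            // LinkageError covers NoClassDefFoundError, e.g. when a superclass is missing.
            return null;
        }
    }

    public static void main(String[] args) {
        String[] names = {
            "org.apache.hadoop.hbase.HBaseConfiguration",
            "org.apache.zookeeper.KeeperException",
            "com.google.protobuf.Message",
            "com.google.common.collect.Lists"
        };
        for (String name : names) {
            String where = locate(name);
            System.out.println(name + " -> " + (where == null ? "NOT FOUND" : where));
        }
    }
}
```

Launching this the same way as the real job (through `hadoop jar`) shows whether `HBaseConfiguration` resolves and, if so, from which jar.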

Solution

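I found the fix: I had not added the HBase jars to HADOOP_CLASSPATH in the hadoop-env.sh file. The stack trace shows the failure happens in RunJar.main while it loads the driver class, that is, in the client JVM started by `hadoop jar`, before any task runs; HADOOP_CLASSPATH is what adds extra entries to that JVM's classpath. Here's what I added to make things work: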

```sh
export HADOOP_CLASSPATH=${HBASE_HOME}/hbase-0.94.22.jar:\
${HBASE_HOME}/hbase-0.94.22-test.jar:\
${HBASE_HOME}/conf:\
${HBASE_HOME}/lib/zookeeper-3.4.5.jar:\
${HBASE_HOME}/lib/protobuf-java-2.4.0a.jar:\
${HBASE_HOME}/lib/guava-11.0.2.jar
```
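One pitfall with a hand-written list like this: the JVM silently skips classpath entries that do not exist, so a mistyped jar name or an unset `HBASE_HOME` produces no error of its own and just leaves the class unresolved. A small sketch that reports which entries of a classpath string are missing on disk is shown below; `ClasspathEntryCheck` is a hypothetical helper, and it reads `HADOOP_CLASSPATH` from the environment of whatever process runs it:

```java
import java.io.File;
import java.util.ArrayList;
import java.util.List;

public class ClasspathEntryCheck {
    /** Returns the entries of a path-separated classpath string that do not exist on disk. */
    static List<String> missingEntries(String classpath) {
        List<String> missing = new ArrayList<>();
        if (classpath == null) {
            return missing;
        }
        for (String entry : classpath.split(File.pathSeparator)) {
            if (entry.isEmpty()) {
                continue;
            }
            // A trailing "*" (dir/*) is expanded by the java launcher; check the directory instead.
            String path = entry.endsWith("*") ? entry.substring(0, entry.length() - 1) : entry;
            if (!new File(path).exists()) {
                missing.add(entry);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        String cp = System.getenv("HADOOP_CLASSPATH");
        if (cp == null) {
            System.out.println("HADOOP_CLASSPATH is not set in this environment.");
            return;
        }
        List<String> missing = missingEntries(cp);
        if (missing.isEmpty()) {
            System.out.println("All HADOOP_CLASSPATH entries exist.");
        } else {
            for (String entry : missing) {
                System.out.println("Missing: " + entry);
            }
        }
    }
}
```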