Map Reduce process in Hadoop

I

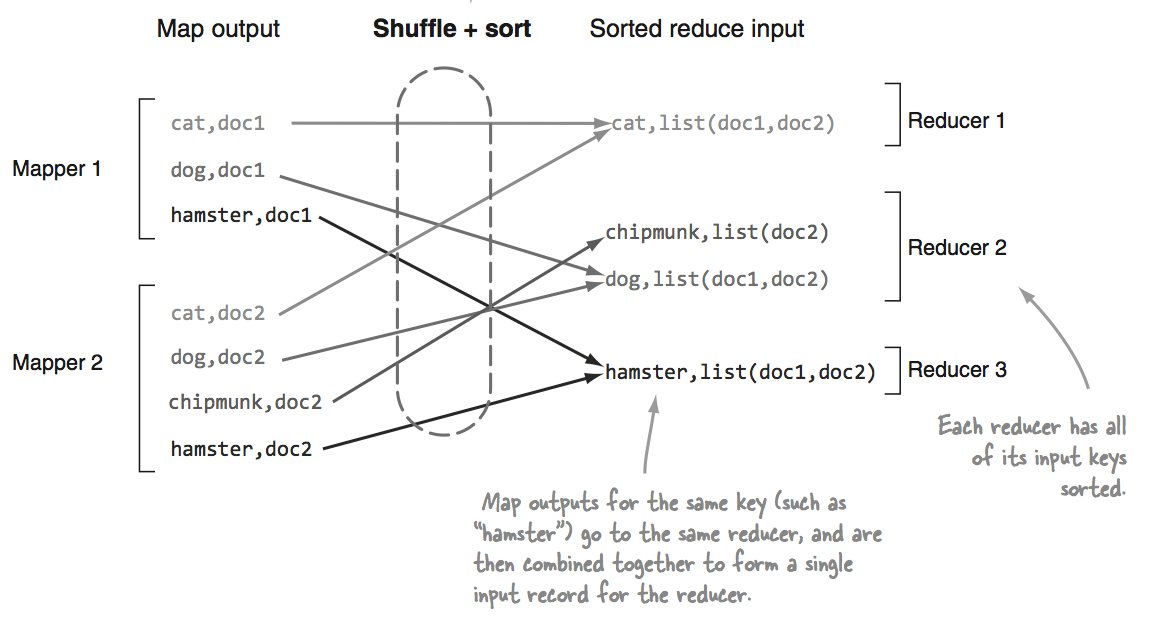

was learning Hadoop using the book Hadoop in Practice, and while reading Chapter 1, I saw this chart:

From the Hadoop documentation: ( http://hadoop.apache.org/docs/current2/api/org/apache/hadoop/mapred/Reducer.html )

1. Shuffle

Reducer is input the grouped output of a Mapper. In the phase the framework, for each Reducer, fetches the relevant partition of the output of all the Mappers, via HTTP.

2. Sorting

The framework groups Reducer inputs by keys (since different Mappers may have output the same key) in this stage.

The shuffle and sort phases occur simultaneously i.e. while outputs are being fetched they are merged.

While I know that shuffle and sorting happen at the same time, it’s not clear to me how the framework decides which reducer receives which mapper output. From the docs, it seems like every reducer has a way of knowing which map output to collect, but I don’t understand how.

So my question is, given the mapper output above, is the end result always the same for each reducer? If so, what are the steps to achieve this result?

Thanks for any clarification!

Solution

It is Partitioner This determines how the output of the mapper is assigned to different reducers.

Partitioner controls the partitioning of the keys of the intermediate map-outputs. The key (or a subset of the key) is used to derive the partition, typically by a hash function. The total number of partitions is the same as the number of reduce tasks for the job. Hence this controls which of the m reduce tasks the intermediate key (and hence the record) is sent for reduction.